Artificial Intelligence is often described as a “black box,” where decisions are made but rarely understood.

However, Decision Tree models break this myth by offering transparent, rule-based predictions that humans can easily interpret.

From credit approval systems to machine fault detection, Decision Trees help organizations understand why a decision was made—not just what decision was made.

But what happens when a single decision tree is not enough?

That’s where Random Forest comes in—a powerful ensemble technique that combines multiple decision trees to improve accuracy, stability, and robustness.

In this article, we will deeply explore:

- How Decision Tree models work

- Why rule-based prediction is powerful

- How Random Forest combines multiple decisions

- Real-world and industrial use cases

- Advantages, limitations, and best practices

This guide is written for engineers, data scientists, and industry professionals who want practical clarity—not theory overload.

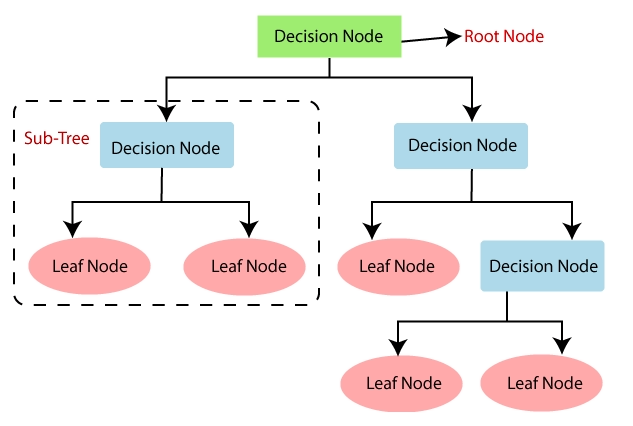

What Are Decision Tree Models? (Practical View)

A Decision Tree model works exactly the way engineers troubleshoot machines.

We don’t calculate complex formulas in our head.

We ask questions step by step:

- Is vibration high?

- Is temperature above limit?

- Is load normal or overloaded?

That is rule-based prediction.

In Decision Tree Models – Rule-Based Prediction and Random Forest – Multiple Decisions Combined, this rule-based logic becomes the foundation of prediction.

Simple Example from Industry:

If vibration RMS > 6.5 mm/s

AND temperature > 75°C

THEN machine condition = FAULT

This is why decision trees are easy to explain to:

- Maintenance teams

- Plant managers

- Auditors

How Rule-Based Prediction Works in Real Projects

In real projects, I’ve used Decision Trees mainly for:

- Initial fault classification

- Baseline logic building

- Explaining AI output to non-technical teams

The biggest advantage of Decision Tree Models – Rule-Based Prediction and Random Forest – Multiple Decisions Combined is clarity.

You don’t need to “trust the model blindly”.

You can see the logic.

Real Use Cases of Decision Tree Models

1. Fault Prediction

- Vibration

- Temperature

- Current / Load

Decision Trees quickly show which parameter caused the fault.

2. Energy Monitoring

- Peak vs normal consumption

- Shift-wise classification

- Abnormal energy usage detection

3.Production Analysis

- Good vs bad production batches

- Cycle time classification

- Downtime reason identification

Where Single Decision Trees Fail (From Experience)

This is important.

In real data:

- Sensors are noisy

- Values fluctuate

- One bad data point can change the result

I’ve personally seen cases where:

- One sensor spike

- Completely changed the decision tree output

This is the biggest limitation of a single tree.

That’s exactly why Random Forest exists.

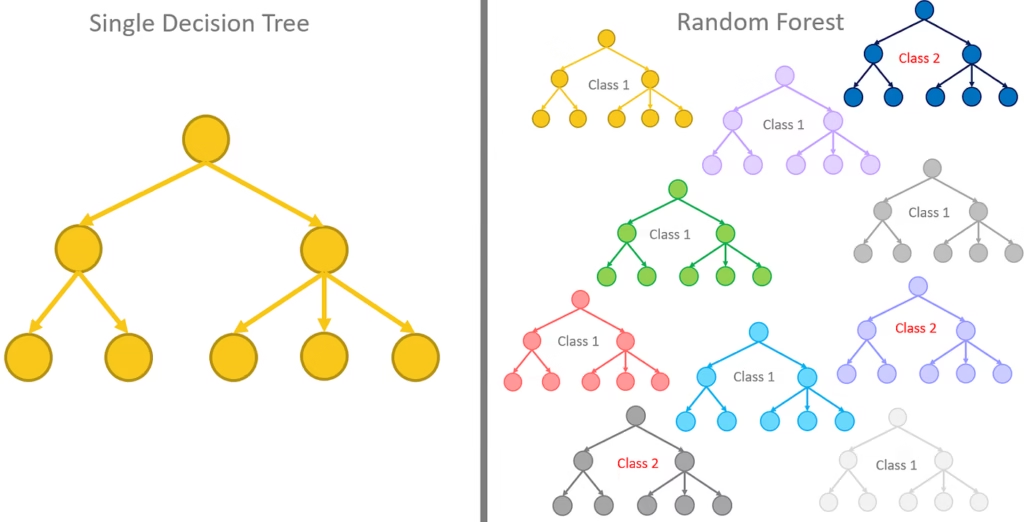

Random Forest – Multiple Decisions Combined

Random Forest is simply a smarter extension of Decision Tree Models – Rule-Based Prediction and Random Forest – Multiple Decisions Combined.

Instead of trusting one decision tree, Random Forest:

- Builds many decision trees

- Each tree looks at data differently

- Final decision is taken by majority vote

Think of it like asking 10 experienced engineers instead of 1.

How Random Forest Works (Simple Explanation)

- Random data samples are selected

- Multiple decision trees are created

- Each tree gives its own prediction

- Final output = combined decision

This reduces risk and improves accuracy.

Industrial Example (From Practice)

Inputs:

- Vibration RMS

- Peak acceleration

- Temperature

- Motor current

- RPM

Output:

- Normal

- Warning

- Fault

Some trees focus on vibration, others on temperature or load.

The final result is balanced and stable.

This is why Decision Tree Models – Rule-Based Prediction and Random Forest – Multiple Decisions Combined work so well in industrial AI systems.

Decision Tree vs Random Forest (Honest Comparison)

| Factor | Decision Tree | Random Forest |

|---|---|---|

| Explainability | Very High | Medium |

| Accuracy | Medium | High |

| Noise handling | Poor | Excellent |

| Industrial readiness | Limited | Strong |

When to Use What (My Recommendation)

Use Decision Tree When:

- You want explainability

- You’re building baseline logic

- Stakeholders want transparency

Use Random Forest When:

- Data is noisy

- Accuracy matters

- System is running in production

In most real systems, I start with Decision Tree, then move to Random Forest.

🏗️ Typical Industrial Architecture

Sensors → MQTT → Node-RED → Database

→ AI Model (Decision Tree / Random Forest)

→ Grafana Dashboard

This architecture is common in:

- Energy Monitoring Systems

- Condition Based Monitoring

- Predictive Maintenance solutions

Best Practices (From Experience)

- Start simple, then optimize

- Avoid very deep trees

- Use feature importance analysis

- Retrain models periodically

- Always validate with real plant data

Conclusion

Decision Tree Models – Rule-Based Prediction and Random Forest – Multiple Decisions Combined represent one of the most practical forms of machine learning.

Decision Trees help us understand the problem.

Random Forest helps us solve it reliably.

In real industrial environments, this combination offers the perfect balance between logic, accuracy, and trust.

For more practical AI, automation, Node-RED, and Grafana insights —

TechKnowledge.in focuses on what actually works on the shop floor.